How Temporal Transforms Engineering Research into an Intelligent Multi-Agent System with Guaranteed Execution

Executive Summary

A leading engineering services firm faced chronic inefficiencies from fragmented research processes, manual task decomposition, and siloed domain expertise across frontend, backend, deployment, and architecture teams. Their legacy approach relied on unstructured workflows that produced inconsistent technical recommendations and lacked systematic quality control mechanisms.

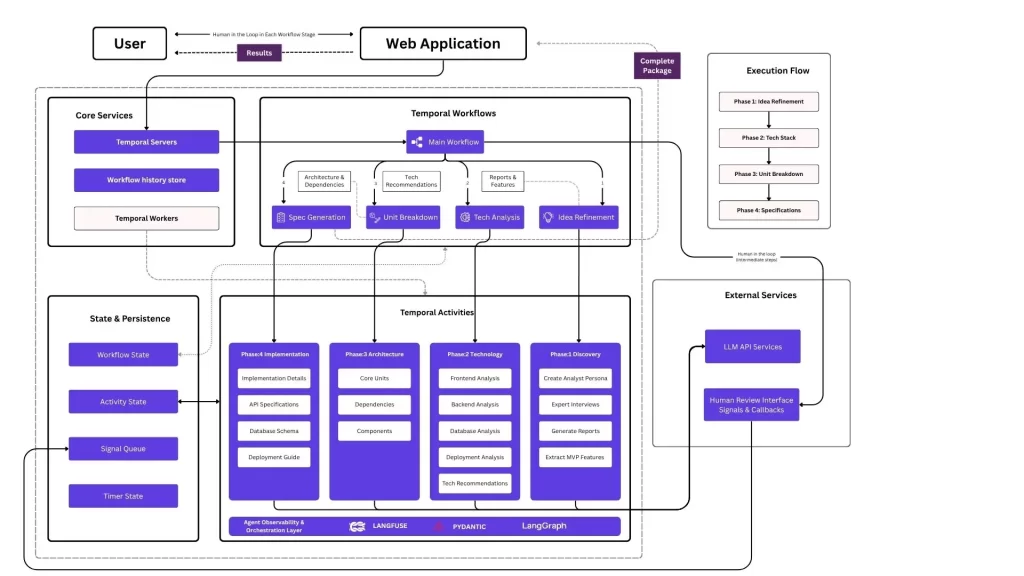

Xgrid’s Solution: By orchestrating a Temporal-powered multi-agent research platform with specialized AI agents, LLM integration, and human-in-the-loop validation workflows, Xgrid delivered guaranteed execution across all engineering research phases. The result: structured technical specifications, automated task decomposition, and reliable AI-generated recommendations with built-in quality control.

The Challenge

Unstructured Engineering Research Process: Teams researched architecture, implementation, and technology decisions across fragmented tools, resulting in inconsistent analysis, missed considerations, and duplicated effort across projects.

Lack of Systematic Task Decomposition: Breaking complex engineering projects into actionable specifications, units, and dependencies required extensive manual effort prone to gaps, inconsistencies, and incomplete coverage.

Siloed Technical Domain Expertise: Coordinating frontend, backend, deployment, and architectural analysis required multiple specialists, which extended timelines and added communication overhead.

Limited Output Quality Control: Teams lacked structured mechanisms for validating, refining, and incorporating human expertise into AI-generated technical recommendations, creating risks of suboptimal specifications.

The Solution: Intelligent Research Operations with Temporal

1. Multi-Agent Orchestration Framework

Coordinates specialized AI agents across research, analysis, and design phases using durable workflows, so coverage stays comprehensive and execution stays consistent even during long-running operations.

2. Phased Workflow Execution Pipeline

Structures engineering research into sequential phases, from data refinement through specifications, with state management, progress tracking, and automatic checkpointing for complex projects.
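The checkpointing idea can be illustrated with a minimal, dependency-free Python sketch. It is not the Temporal implementation (Temporal persists workflow progress through its own recorded event history); it only shows the behavior a checkpointed pipeline provides: a rerun skips phases that already finished. The intermediate phase names, `run_pipeline`, and `make_flaky_phase` are hypothetical, since the source names only the first and last phase.

```python
from typing import Callable, Dict, List

# Hypothetical phase list: only "data refinement" and "specifications"
# are named in the source; the middle phases are assumptions.
PHASES: List[str] = ["data_refinement", "research", "analysis", "specifications"]


def run_pipeline(
    checkpoint: Dict[str, str],
    run_phase: Callable[[str], str],
) -> Dict[str, str]:
    """Run phases in order, recording each result so a rerun skips finished phases."""
    for name in PHASES:
        if name in checkpoint:  # completed during an earlier attempt
            continue
        # The result is stored only if the phase returns without raising.
        checkpoint[name] = run_phase(name)
    return checkpoint


def make_flaky_phase(fail_once_on: str) -> Callable[[str], str]:
    """Build a phase runner that fails the first time it sees `fail_once_on`."""
    failed = False

    def run_phase(name: str) -> str:
        nonlocal failed
        if name == fail_once_on and not failed:
            failed = True
            raise RuntimeError(f"simulated outage during {name}")
        return f"{name}: complete"

    return run_phase
```

Calling `run_pipeline` a second time with the same `checkpoint` dictionary after a failure resumes at the failed phase rather than repeating the earlier ones.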

3. Specialized Sub-Agent Architecture

Deploys domain-specific agents for implementation details, architecture components, technology analysis, and discovery, enabling parallel specialized research across technical domains.
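As a rough illustration of parallel specialized research, the sketch below fans one question out to four agents concurrently using plain `asyncio`. The agent body is a stand-in (a real agent would call an LLM), and the function names are assumptions rather than the platform's actual code.

```python
import asyncio
from typing import Dict, List

# Agent areas taken from the description above; identifiers are illustrative.
AGENT_AREAS: List[str] = [
    "implementation_details",
    "architecture_components",
    "technology_analysis",
    "discovery",
]


async def research_agent(area: str, question: str) -> str:
    """Stand-in for a domain agent; a real one would call an LLM here."""
    await asyncio.sleep(0.01)  # simulate I/O latency
    return f"[{area}] findings for: {question}"


async def research_all(question: str) -> Dict[str, str]:
    """Run one agent per area concurrently and collect results keyed by area."""
    # gather() returns results in the same order as its arguments.
    results = await asyncio.gather(
        *(research_agent(area, question) for area in AGENT_AREAS)
    )
    return dict(zip(AGENT_AREAS, results))
```

Because the agents run concurrently, total latency is close to that of the slowest agent rather than the sum of all four.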

4. LLM API Integration Layer

Calls external language model services through standardized APIs and validates responses with Pydantic, so generated technical content is structured, type-safe, and consistent.
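To show what schema validation of an LLM reply involves, here is a minimal sketch. The platform uses Pydantic; to stay dependency-free this example swaps in a standard-library dataclass with hand-written checks, which a Pydantic model would express more concisely. The `TechRecommendation` schema and its field names are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TechRecommendation:
    """Hypothetical output schema; field names are illustrative only."""
    component: str
    technology: str
    rationale: str
    risks: List[str]


def parse_recommendation(raw: str) -> TechRecommendation:
    """Parse an LLM's JSON reply, rejecting missing, extra, or mistyped fields."""
    data = json.loads(raw)  # malformed JSON raises JSONDecodeError (a ValueError)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {"component", "technology", "rationale", "risks"}
    if set(data) != expected:
        raise ValueError(f"fields must be exactly {sorted(expected)}, got {sorted(data)}")
    for key in ("component", "technology", "rationale"):
        if not isinstance(data[key], str) or not data[key].strip():
            raise ValueError(f"{key} must be a non-empty string")
    risks = data["risks"]
    if not isinstance(risks, list) or not all(isinstance(r, str) for r in risks):
        raise ValueError("risks must be a list of strings")
    return TechRecommendation(**data)
```

Rejecting a malformed reply at this boundary lets the caller retry the LLM request instead of passing inconsistent data to later phases.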

5. Security Guardrails Framework

RBAC monitoring and access controls ensure secure, auditable execution of scheduled tasks and AI research processes with comprehensive activity logging.
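A role check paired with an audit trail might look like the following sketch. The roles, permissions, and the `AuditedAccess` class are assumptions for illustration; the source does not describe the actual access model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical roles and permissions; the source does not list them.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "researcher": {"start_research"},
    "reviewer": {"start_research", "approve_spec"},
}


@dataclass
class AuditedAccess:
    """Checks role permissions and records every decision, including denials."""
    audit_log: List[Tuple[str, str, str, bool]] = field(default_factory=list)

    def check(self, user: str, role: str, action: str) -> bool:
        # Unknown roles get an empty permission set, so they are denied.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append((user, role, action, allowed))
        return allowed
```

Logging denied attempts as well as granted ones is what makes the trail useful for audits.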

6. Human Review Interface System

Expert validation workflows with Temporal signals/callbacks enable asynchronous review and approval of AI-generated technical specifications and recommendations.
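The asynchronous review pattern can be sketched without any workflow engine: a task suspends until a reviewer's decision arrives, with no polling loop. The example below uses `asyncio.Event` as a stand-in for a Temporal signal; `SpecReview`, its methods, and the timeout-based escalation are illustrative assumptions, not the platform's API.

```python
import asyncio
from typing import Optional


class SpecReview:
    """Holds a draft until a reviewer decision arrives (stand-in for a workflow signal)."""

    def __init__(self, draft: str) -> None:
        self.draft = draft
        self._decision: Optional[bool] = None
        self._decided = asyncio.Event()

    def submit_decision(self, approved: bool) -> None:
        """Called from outside the waiting task, e.g. by a review UI handler."""
        self._decision = approved
        self._decided.set()

    async def run(self, timeout_s: float) -> str:
        """Wait for a decision without polling; escalate if none arrives in time."""
        try:
            await asyncio.wait_for(self._decided.wait(), timeout=timeout_s)
        except asyncio.TimeoutError:
            return "escalated"
        return "approved" if self._decision else "rejected"
```

Unlike this in-memory sketch, a durable workflow would keep the pending review across process restarts, which matters when a review takes hours or days.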

7. Agent Observability Platform

Monitors workflow state and agent interactions through LangGraph orchestration with LangFuse tracing for debugging, performance optimization, and quality assurance.

Implementation Highlights

| Phase | Key Deliverable |
|---|---|
| Requirements & Architecture | Multi-agent workflow design, LLM integration planning, human-in-the-loop patterns |
| Agent Development | Research agents, analysis agents, specification generators with LangGraph orchestration |
| Workflow Integration | Temporal workflows, phased execution pipeline, state management layer |
| LLM & RAG Setup | External API integration, Pydantic validation, knowledge base configuration |
| Human Review Workflows | Callback mechanisms, approval interfaces, expert validation patterns |
| Security & Observability | RBAC implementation, LangFuse monitoring, audit logging |
| Production Deployment | Agent pool scaling, workflow optimization, performance tuning |

Results: Guaranteed Research Execution

Operational Reliability

- Workflow Completion: 99.99% success rate with automatic retry mechanisms for LLM API calls

- Agent Coordination: Zero workflow failures during multi-agent research operations

- Automated Recovery: Temporal workflows resume automatically after API rate limits or network issues
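For readers unfamiliar with automatic retries, the sketch below shows the general idea of retrying a transient LLM API failure with exponentially growing delays. In the platform this behavior comes from Temporal's retry handling; the function, its parameters, and `TransientLLMError` are hypothetical and only illustrate the pattern.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientLLMError(Exception):
    """Hypothetical error type for rate limits and network failures."""


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay_s: float = 0.5,
    backoff: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying transient failures with exponentially growing delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    delay = base_delay_s
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientLLMError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            sleep(delay)
            delay *= backoff
    raise AssertionError("unreachable")  # the loop always returns or raises
```

The `sleep` parameter is injectable so the delays can be observed in tests without actually waiting.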

Process Efficiency

- Research Time: Reduced from days to hours through parallel agent execution

- Specification Quality: Dramatically improved through structured validation and human review

- Knowledge Consistency: Unified technical recommendations across all engineering domains

- Manual Effort: Expert time focused on high-value review instead of manual research

Technical Performance

- Agent Orchestration: Seamless coordination of 10+ specialized research agents

- LLM Integration: Sub-second API response handling with intelligent retry logic

- State Management: Persistent workflow state enabling pause/resume of complex research

Operational Outcomes

Zero Manual Recovery: Temporal guarantees completion for all research workflows and specification generation tasks.

Structured Research Process: Phased pipeline transforms unstructured research into systematic, repeatable technical outputs.

Domain Expertise Coordination: Multi-agent architecture efficiently coordinates frontend, backend, deployment, and architecture analysis in parallel.

Quality-Controlled AI Output: Human review workflows ensure expert validation of AI-generated technical recommendations before finalization.

Scalable Research Capacity: Agent-based architecture scales research capabilities without proportional increase in specialist headcount.

Comprehensive Observability: Real-time monitoring provides visibility into agent interactions, LLM calls, and workflow progression.

Lessons Learned

Agent orchestration is critical because coordinating multiple specialized AI agents requires durable workflow patterns to prevent partial completions.

Human-in-the-loop review builds trust: asynchronous approval workflows validate AI recommendations without blocking the rest of the pipeline.

Temporal workflows eliminate polling by enabling event-driven agent coordination with guaranteed state persistence across long-running research.

Structured validation prevents drift through Pydantic schemas ensuring consistent, type-safe technical outputs from LLM responses.

Observability drives optimization by identifying agent bottlenecks, LLM performance issues, and workflow inefficiencies through comprehensive tracing.

Start with high-value domains such as architecture analysis and technology selection to demonstrate immediate ROI before expanding agent coverage.

Looking Ahead

✅ Expanded Agent Ecosystem: Additional specialized agents for security analysis, cost optimization, and performance benchmarking

✅ Enhanced RAG Integration: Vector database knowledge retrieval for context-aware technical recommendations

✅ Multi-Model Orchestration: Parallel LLM execution with intelligent model selection based on task requirements

✅ Automated Testing Workflows: Agent-driven test generation and validation for technical specifications

✅ Continuous Learning Pipeline: Feedback loops incorporating human review outcomes to improve agent performance

The Xgrid Advantage

✅ Guaranteed Workflow Completion: Temporal ensures every research task finishes, even with LLM API failures

✅ Zero Agent Coordination Failures: Durable orchestration preserves state across all agent interactions

✅ Accelerated Research Cycles: Parallel agent execution reduces specification time from days to hours

✅ Full Quality Control: Human review workflows validate AI outputs before production use

✅ Scalable Intelligence: Multi-agent architecture expands research capacity without linear cost growth

✅ Comprehensive Observability: Real-time visibility into agent activities and workflow progression

We turned unstructured engineering research from manual guesswork into intelligent, guaranteed execution.

With Temporal, every research query completes, every specification gets validated, and no engineering team operates without structured technical guidance. This wasn’t achieved by hoping AI agents coordinate perfectly, but by designing for durability and human oversight.